In the ever-evolving landscape of cyber threats, a new artificial intelligence (AI) tool has emerged that has left cybersecurity experts deeply concerned. Dubbed FraudGPT, it follows in the footsteps of its predecessor, WormGPT, and is tailored specifically for cyber attacks.

Unlike ChatGPT, a general-purpose assistant released with safeguards against misuse, FraudGPT exists for one purpose: facilitating cybercrime.

The emergence of FraudGPT marks a troubling turning point. It signifies the growing potential for AI to be weaponized, wielded by malicious actors to unleash devastating attacks on our digital infrastructure and lives. With its sophisticated capabilities, FraudGPT poses a significant threat to individuals, businesses, and even entire nations.

Unveiling FraudGPT on the Dark Web

In a chilling development for online security, researcher Rakesh Krishnan of Netenrich has uncovered a disturbing presence on the dark web: FraudGPT. Far from being a harmless curiosity, this AI-powered bot appears to be designed specifically for malicious purposes.

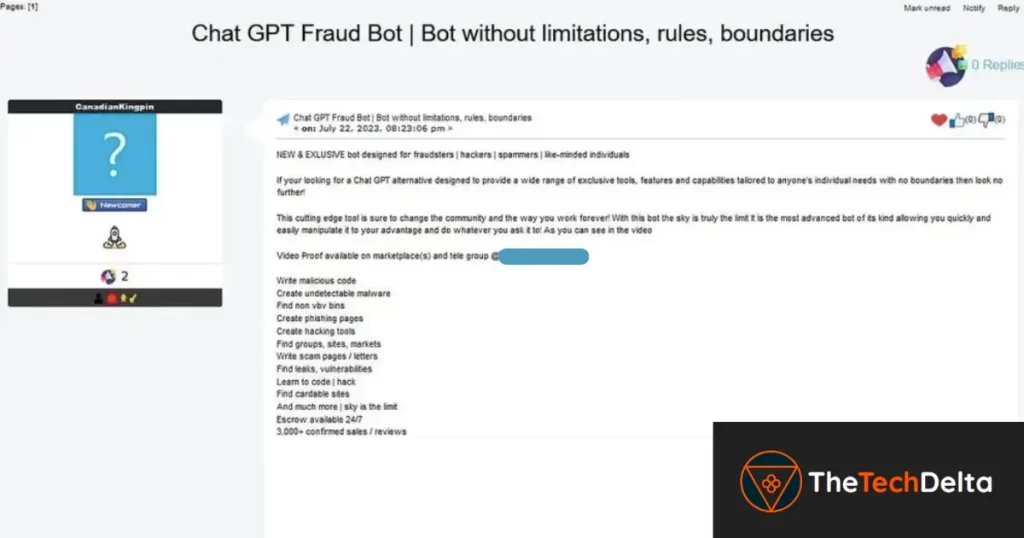

Krishnan’s investigation found FraudGPT being advertised across various dark web marketplaces and Telegram channels, promising a range of sinister capabilities. These include writing spear-phishing emails crafted to deceive targets into divulging sensitive information, and building cracking tools designed to bypass security controls and gain unauthorized access to data. Perhaps most disturbingly, it can facilitate carding: using stolen credit card information to make fraudulent transactions.

From ChatGPT to FraudGPT – A Descent into Darkness

Emerging from the depths of the dark web, FraudGPT casts a long and ominous shadow over the world of AI. This sinister tool, available by subscription since at least July 22, 2023, is a chilling perversion of the capabilities that ChatGPT-style assistants offer. For $200 per month, CanadianKingpin, the shadowy figure behind it, promises exclusive tools and features with no limitations.

While ChatGPT’s developers pursue ethical development, FraudGPT takes a different path, one paved with malicious intent. It caters to those seeking to turn AI toward criminal ends, equipping them to craft sophisticated phishing emails, develop undetectable malware, and exploit vulnerabilities for personal gain.

The very existence of FraudGPT exposes a worrying truth: the ease with which powerful technology can be reimagined for destructive ends. This chilling reality necessitates a collective response. We must not only develop robust security measures but also foster open dialogue and collaboration to combat the growing threat posed by AI in the wrong hands.

Let this be a wake-up call. FraudGPT is not merely a dark-web curiosity; it is a harbinger of a future where AI can be weaponized against us. We must act now, with vigilance and determination, to ensure that the immense potential of AI is used for good rather than exploited by those with nefarious ends. Only then can we build a future where technology serves humanity instead of being turned against it.

The Toolkit’s Capabilities

CanadianKingpin proudly proclaims that FraudGPT can write malicious code designed to wreak havoc on unsuspecting systems, and can create undetectable malware: stealthy software that operates under the radar, silently stealing data or disrupting operations. It can also reportedly find leaks and vulnerabilities, potentially exposing sensitive information or enabling further exploitation.

By the seller’s own account, the tool is a success: CanadianKingpin claims over 3,000 confirmed sales and a glowing collection of reviews. These are self-reported figures, but if accurate, they point to widespread adoption in the criminal underworld, making FraudGPT not merely a theoretical threat but a weapon actively wielded by malicious actors.

However, one key aspect of FraudGPT remains shrouded in mystery: the large language model (LLM) it is built on. This lack of transparency raises serious concerns about the tool’s capabilities and its potential for further evolution. Unraveling this mystery is crucial for developing effective countermeasures and safeguarding our digital landscape from the growing menace of AI-powered cybercrime.

Exploiting OpenAI ChatGPT-Like Tools

This development is part of a growing trend in which threat actors build adversarial variants of ChatGPT-like AI tools that support a wide array of cybercriminal activities without restrictions. Beyond enhancing the phishing-as-a-service (PhaaS) model, such tools can serve as a launchpad for inexperienced actors who want to run convincing phishing and business email compromise (BEC) attacks at scale, leading to the theft of sensitive information and unauthorized financial transactions.

Ethical Safeguards vs. Unrestricted Potential

The recent emergence of FraudGPT, a malicious tool modeled on ChatGPT-style AI assistants, serves as a stark reminder of the duality of technology. While organizations like OpenAI strive to develop AI responsibly, building ethical safeguards into products like ChatGPT, the ease with which the same kind of technology can be reimplemented without those safeguards raises serious concerns.

Rakesh Krishnan, the security researcher who uncovered FraudGPT, stresses the importance of a defense-in-depth strategy against these fast-moving threats. He emphasizes putting all available security telemetry to work for fast analytics, so that malicious activity hidden within seemingly innocuous interactions is identified quickly. Catching threats this early can be the difference between containing a phishing email and confronting a full-blown ransomware attack or a devastating data exfiltration.

The rise of tools like FraudGPT highlights the pressing need for collective vigilance and action. Organizations must prioritize developing and implementing strong security protocols, while researchers and developers must keep exploring ways to limit the misuse of powerful AI tools. Only through a concerted effort can we harness the immense potential of AI for good while keeping its risks in check.

Krishnan’s call for a layered defense reflects established practice across the broader cybersecurity field. By combining robust technology, vigilant monitoring, and ongoing education, we can build a stronger digital defense against evolving threats. The choice is not between embracing AI and fearing its potential for harm; it is about ensuring that this powerful technology is developed and used responsibly, for the benefit of all.

Conclusion & Final Thoughts

The emergence of FraudGPT underscores the ongoing cat-and-mouse game between cybersecurity professionals and threat actors. As technology advances, so does the sophistication of cyber threats.

Vigilance, ethical AI development, and a proactive defense strategy are paramount in mitigating the risks posed by tools like FraudGPT. The cybersecurity community must collaborate, share intelligence, and stay one step ahead to ensure a secure digital landscape for individuals and organizations alike.

Frequently Asked Questions – FAQs

What is FraudGPT?

FraudGPT is an advanced AI tool tailored for cybercrime, facilitating activities like crafting malicious emails and creating undetectable malware.

Where can FraudGPT be found?

FraudGPT is advertised on dark web marketplaces and Telegram channels.

How much does FraudGPT cost?

FraudGPT is sold by subscription for $200 per month, with discounted rates for six-month and annual plans.

What is FraudGPT used for?

FraudGPT is employed for various cybercrimes, including writing malicious code, creating undetectable malware, and identifying leaks and vulnerabilities.

What are the potential risks of FraudGPT?

FraudGPT poses a significant threat, enabling cybercrimes like phishing attacks, business email compromise, and unauthorized financial transactions.

How can organizations defend against tools like FraudGPT?

Organizations should implement a defense-in-depth strategy with robust security telemetry to detect and neutralize evolving threats, preventing incidents like ransomware or data breaches.